In the rapidly evolving world of artificial intelligence (AI), two tech giants, Intel and NVIDIA, are both optimizing their ecosystems for Meta's Llama 3, a family of large language models (LLMs). Each company is drawing on its own strengths and product lines.

1. Intel’s Approach: A Blend of Performance and Simplicity

Intel is best known for the microprocessors found in most personal computers. The company has validated its AI product portfolio on the first Llama 3 models, the 8B- and 70B-parameter versions, across a range of products: Intel® Gaudi® accelerators, Intel® Xeon® processors, Intel® Core™ Ultra processors, and Intel® Arc™ graphics.

Intel's approach to AI centers on reducing latency and delivering strong performance for Llama 3. In general, latency is the delay between issuing a request and receiving the response. For an LLM, it is usually measured as the time until the first generated token appears and the time between subsequent tokens, and it is a critical factor in how responsive an AI application feels.
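To make the two LLM latency figures concrete, here is a minimal Python sketch that times a streamed generation and reports time to first token and mean inter-token latency. It rests on a loud assumption: `fake_token_stream` is a hypothetical stand-in that simply sleeps for a fixed delay per token; it is not a real Llama 3 runtime or any Intel or NVIDIA API. In practice you would pass in the token iterator returned by whatever inference framework you use.

```python
import time
from typing import Iterable, Iterator


def fake_token_stream(n_tokens: int, delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming generate() call.

    Each token takes delay_s seconds to "produce"; swap in a real
    Llama 3 token iterator to measure an actual model.
    """
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i}"


def measure_latency(stream: Iterable[str]) -> dict:
    """Time a token stream and return common LLM latency metrics."""
    start = time.perf_counter()
    arrivals = []  # clock reading at which each token arrived
    for _token in stream:
        arrivals.append(time.perf_counter())
    if not arrivals:
        raise ValueError("stream produced no tokens")

    # Time to first token: from request start until the first token arrives.
    ttft = arrivals[0] - start
    # Inter-token latency: gaps between consecutive token arrivals.
    gaps = [later - earlier for earlier, later in zip(arrivals, arrivals[1:])]
    mean_gap = sum(gaps) / len(gaps) if gaps else 0.0
    return {
        "tokens": len(arrivals),
        "time_to_first_token_s": ttft,
        "mean_inter_token_s": mean_gap,
        "total_s": arrivals[-1] - start,
    }


if __name__ == "__main__":
    stats = measure_latency(fake_token_stream(n_tokens=5, delay_s=0.01))
    for name, value in stats.items():
        print(f"{name}: {value}")
```

Because the stream is consumed lazily, the timer starts before the first token is requested, so time to first token includes all the work done before that token is ready. Averaging over many prompts and discarding a warm-up run gives steadier numbers than a single measurement, since the first call often includes one-time setup costs.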

Intel's initial testing and performance results for the Llama 3 8B and 70B models use open-source software, including PyTorch, DeepSpeed, the Intel Optimum Habana library, and Intel Extension for PyTorch. Open-source software is software whose source code anyone can inspect, modify, and enhance, and this openness fosters collaboration that drives innovation in the AI space.

By using open-source software, Intel can both draw on the collective expertise of developers worldwide and contribute back to the AI community. This approach aligns with Intel's mission of creating world-changing technology that enriches the lives of every person on earth.

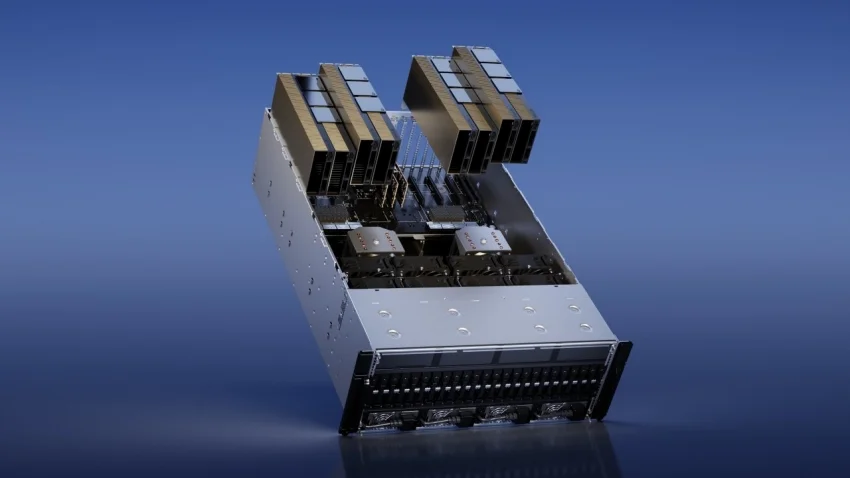

2. NVIDIA’s Strategy: Harnessing the Power of GPUs

NVIDIA is focusing on GPU-accelerated computing for AI. This approach leverages the parallel processing capabilities of GPUs, which are well suited to the heavy computational demands of training large language models like Llama 3: much of that work consists of large matrix multiplications that can be split across thousands of GPU cores running at once.

Meta engineers trained Llama 3 on a computer cluster with 24,576 NVIDIA H100 Tensor Core GPUs. The H100 belongs to NVIDIA's Hopper generation of data center GPUs, designed specifically for AI workloads. The GPUs were linked by an NVIDIA Quantum-2 InfiniBand network, a high-speed, low-latency interconnect that enables efficient data transfer between them.

Infrastructure at this scale makes it practical to train models as large as Llama 3 efficiently, enabling faster iterations and more rapid development of AI applications.

But NVIDIA's strategy goes beyond providing the hardware for training AI models. The company is also making Llama 3 accessible across platforms, from the cloud and data center to the edge and the PC. This broad availability empowers developers, researchers, and businesses to innovate responsibly across a wide variety of applications.

In the cloud and data center, NVIDIA GPUs provide the computational power needed to train and run large language models like Llama 3. At the edge, NVIDIA's Jetson platform supports deploying AI models in devices such as robots and drones. On the PC, NVIDIA's GeForce GPUs let developers experiment with AI on their own machines.

By providing a comprehensive ecosystem for AI, from hardware to software, NVIDIA is not only advancing the state of AI but also democratizing access to AI tools and technologies. This strategy aligns with NVIDIA’s vision of a world where AI is accessible to everyone and can be used to solve the world’s most pressing problems.

The Shared Vision: Democratizing AI

Both Intel and NVIDIA share a common vision: to democratize AI. They are investing in the software and AI ecosystem to ensure their products are ready for the latest innovations in the dynamic AI space. By optimizing their ecosystems for Llama 3, they are paving the way for the next generation of AI applications.

The efforts of Intel and NVIDIA to optimize their ecosystems for Llama 3 should also benefit end users directly. Faster processing, lower latency, and higher throughput make AI applications more efficient and more responsive, which could accelerate work in fields such as natural language processing and data analysis.

The Future of Llama 3

The future of Llama 3 looks bright. Despite strong competition, the model's open availability and the backing of tech giants like Intel and NVIDIA should help keep it relevant. As Meta introduces new capabilities, additional model sizes, and enhanced performance, Intel and NVIDIA will continue to optimize their AI products to support it.

Taken together, the work Intel and NVIDIA are each doing with Meta on Llama 3 represents a significant step forward for AI and shows how collaboration across the industry drives technological progress. As we look to the future, one thing is clear: the potential of AI is vast, and we're just beginning to scratch the surface.
